Provider for Apache Airflow 2.x to schedule Apache Mesos

With my Apache Mesos provider for Apache Airflow, Airflow DAGs and their tasks can run in an Apache Mesos cluster. Combined with autoscaling at the cloud hosting provider, theoretically unlimited resources are available.

Install the provider with pip:

pip install avmesos-airflow-provider

Then add the following to the Airflow configuration:

[core]

executor = avmesos_airflow_provider.executors.mesos_executor.MesosExecutor

[mesos]

mesos_ssl = True

master = master.mesos:5050

framework_name = Airflow

checkpoint = True

failover_timeout = 604800

command_shell = True

task_cpu = 1

task_memory = 20000

authenticate = True

default_principal = <MESOS_MASTER_PRINCIPAL>

default_secret = <MESOS_MASTER_SECRET>

docker_image_slave = avhost/docker-airflow:v2.1.2

docker_volume_driver = local

docker_volume_dag_name = airflowdags

docker_volume_dag_container_path = /home/airflow/airflow/dags/

docker_sock = /var/run/docker.sock

docker_volume_logs_name = airflowlogs

docker_volume_logs_container_path = /home/airflow/airflow/logs/

docker_environment = '[{ "name":"<KEY>", "value":"<VALUE>" }, { ... }]'

api_username = <USERNAME FOR THIS API>

api_password = <PASSWORD FOR THIS API>
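The `docker_environment` entry expects a JSON list of name/value pairs, which is easy to get wrong when written by hand. As a minimal sketch, the value could be generated from a plain dictionary. The helper name `docker_environment_value` is hypothetical and not part of the provider, and wrapping the result in single quotes simply mirrors the example above.

```python
import json


def docker_environment_value(env):
    """Render a dict as a JSON list of {"name": ..., "value": ...} pairs.

    Hypothetical helper, not part of avmesos-airflow-provider. The
    surrounding single quotes mirror the configuration example; adjust
    them if your setup expects the raw JSON instead.
    """
    pairs = [{"name": key, "value": str(value)} for key, value in env.items()]
    # json.dumps produces a single-line string, which fits on one config line.
    return "'" + json.dumps(pairs) + "'"


if __name__ == "__main__":
    # Example variables are placeholders, not settings required by the provider.
    print("docker_environment = " + docker_environment_value({"MY_KEY": "my-value"}))
```

Paste the printed line into the `[mesos]` section in place of the placeholder entry.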

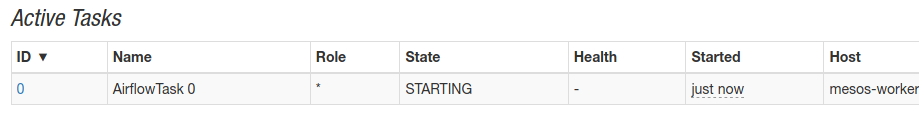

Airflow Task scheduled in Mesos

AWS Autoscaler for Apache Airflow and Apache Mesos

Since the AWS autoscaling service is not ideal for long-running Airflow jobs, we have written a program that creates EC2 instances as soon as Airflow DAGs wait in the queue for too long. Our mesos-airflow-autoscaler launches predefined EC2 instances and terminates them again as soon as there is no Airflow workload left. The prerequisite is an AMI that automatically connects to Apache Mesos after startup and registers itself as a Mesos agent.
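The scaling rule described above can be sketched as a small decision function. This is an illustration only, not the actual mesos-airflow-autoscaler code: the `ClusterState` fields, the `decide` function, and the thresholds `max_wait_seconds` and `max_instances` are all assumptions made for the example, and the EC2 calls themselves are left out.

```python
from dataclasses import dataclass


@dataclass
class ClusterState:
    """Snapshot of the workload; all fields are hypothetical for this sketch."""
    queued_tasks: int             # Airflow tasks waiting for resources
    running_tasks: int            # Airflow tasks currently running in Mesos
    oldest_queued_seconds: float  # wait time of the longest-queued task
    autoscaled_instances: int     # EC2 instances started by the autoscaler


def decide(state, max_wait_seconds=300.0, max_instances=5):
    """Return "launch", "terminate", or "hold" for the given snapshot."""
    waiting_too_long = (
        state.queued_tasks > 0
        and state.oldest_queued_seconds > max_wait_seconds
    )
    # Scale up when work has waited too long and capacity may still grow.
    if waiting_too_long and state.autoscaled_instances < max_instances:
        return "launch"
    # Scale down only when there is no Airflow workload at all.
    idle = state.queued_tasks == 0 and state.running_tasks == 0
    if idle and state.autoscaled_instances > 0:
        return "terminate"
    return "hold"
```

For example, `decide(ClusterState(3, 0, 600.0, 0))` returns `"launch"`, while a snapshot with no queued or running tasks and one autoscaled instance returns `"terminate"`. Terminating only when the cluster is fully idle avoids killing agents that still run long jobs, which is the concern raised about the standard autoscaling service.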
